LUCID Trusted Research Environment

Work with patient-level data, alone or collaboratively, in a high-performance data science environment

Discover the new speed of real-world evidence generation

No additional IT. No black boxes. No limits to what you could discover.

“A directional answer now, or a precise answer later.” That’s a choice analysts no longer have to make. With LUCID, stage the patient-level data behind your cohort in a powerful notebook environment that supports your code, without leaving TriNetX or downloading files. Explore, analyze, and model with complete control.

LUCID is where leaders in real-world evidence generation, data innovation, clinical operations, and medical affairs find their competitive edge.

Gain transparency

Want to shine a light into the black box?

Our Advanced Analytics are ideal for getting directional answers in minutes. But sometimes you need to change test assumptions or explore the sample data in depth. With LUCID, the methods and data behind your analysis are right before your eyes.

Save time

Why spend weeks building your shell table when you can do it in hours?

LUCID connects a versatile data coding environment to our live data networks for the rapid, self-service staging of the values you need. Our powerful cloud architecture delivers blazing fast run times.

Empower your team

Struggling to get on the same page?

Two heads are better than one. Three are even better. Share your notebook with colleagues inside your organization for synchronous coding and annotation. Then export your results, including visualizations, to the stakeholders who rely on your discoveries.

Tear down silos

Looking for one place to house all your data?

Make LUCID more than just your TriNetX data environment. Upload your own data to LUCID for a central, enterprise-wide resource. Work with our team to harmonize all your data, as you consolidate environments and save cost.

Webinar

Real-World Evidence Generation: A Model Process

When it comes to bringing better treatments to patients, speed matters. Real-world evidence generation needs to keep pace. Historically, it hasn’t. Data sourcing, licensing, integration, staging, and analysis have remained fragmented processes prone to delay.

Recently, we hosted a webinar diagnosing the problems with real-world evidence generation as it’s conducted today. Featuring TriNetX Chief Scientific Officer and FDA Sentinel System co-architect Jeff Brown, PhD, the webinar shows how LUCID connects the industry’s largest federated network of EHR and claims data to a high-performance data science environment.

Watch a preview here, then make sure to access the full recording.

A straight line to illumination

Define your cohort

Apply your cohort criteria to millions of de-identified records built from EHR, labs, and claims. Use our powerful query builder for real-time counts, or work with our team of clinical data analysts.

License the data

Start our fast but thorough licensing process with a few clicks. We’ll help you make sure you’ve got all the data you need to power your analysis, and none that you don’t.

Send to LUCID

Don’t waste time downloading, uploading, or asking IT to provision more space. A few more button pushes, and the world is yours: pre-harmonized data in a clear, common-sense data model for rapid profiling, table joins, and more.

Start discovering

Lucid’s notebook environment lets experienced data analysts annotate, code, analyze, and visualize with unparallel ease. Leverage packages in R, SQL, and Python to explore, analyze, and visualize your data – independently or with colleagues. LUCID makes light work of repetitive analyses: code it once, import new data as it becomes available, and run as frequently as needed.

Put your insights to work

Building a model to find patients or calculate risk? We’ll help you bring the formula back into our live networks for broad deployment – even across global populations.

Already have data? Upload it to LUCID, where our team of informaticists can help you standardize any disparate terminologies.

The clear path to better research and drug development

Evidence Generation

Answer questions of safety, efficacy, and value for all your stakeholders, from patients to regulators.

Participant Identification

Build models for detecting unrecorded or missed diagnoses based on patient history, including lab values.

Study Design

Inform your choice of comparators and endpoints by surveying the treatment landscape and comparing outcomes.

Program Prioritization

Allocate resources across your pipeline in accordance with real-world needs and opportunities.

Probability of Technical Success

Will your trial show positive results? Predict the safety and efficacy of a proposed treatment with longitudinal analyses of real-world patients.

Why LUCID?

For regulatory-grade evidence

Tools for rapid, templated analysis play a crucial rule in generating hypothesis, optimizing study designs, and driving publication-grade research. Typically, though, they aren’t enough to support regulatory decision-making. For that, only a data science environment that gives code-proficient researchers complete control will suffice. That’s why we built LUCID. Below are a sample of publications that spell out the challenges of generating evidence fit for FDA review, all either co-authored or made possible by contributions from Jeffrey Brown, PhD, Chief Scientific Officer here at TriNetX.

Methodological transparency. Replicability. These are just some of the key principles you’ll read about—principles that informed our design of LUCID from the start.

Replication

How can you tell if evidence is strong? One test is to apply the methods to new data representing the same population under study. Deepen your knowledge of replication with this article from the REPEAT initiative, where our Chief Scientific Officer Jeff Brown serves on the advisory board.

Wang, S.V., Sreedhara, S.K., Schneeweiss, S. et al. Reproducibility of real-world evidence studies using clinical practice data to inform regulatory and coverage decisions. Nat Commun 13, 5126 (2022).

Risk

How do you monitor the risks posed by drug and biologic products? While self-reported data, like that found in FAERS, raise valid concerns, only thorough analysis from multiple data sources can help us untangle the relationships between adverse event, patient, disease, and treatment. That’s why the FDA created Sentinel.

Jeffrey S Brown, Aaron B Mendelsohn, Young Hee Nam, Judith C Maro, Noelle M Cocoros, Carla Rodriguez-Watson, Catherine M Lockhart, Richard Platt, Robert Ball, Gerald J Dal Pan, Sengwee Toh, The US Food and Drug Administration Sentinel System: a national resource for a learning health system, Journal of the American Medical Informatics Association, Volume 29, Issue 12, December 2022, Pages 2191–2200.

Reliability

The Sentinel system prioritizes “analytic flexibility, transparency, and reproducibility while protecting patient privacy.” The same is true of LUCID. See how a common data model and distributed networks work together to offer data richness while safeguarding privacy.

Jeffrey S Brown, Judith C Maro, Michael Nguyen, Robert Ball, Using and improving distributed data networks to generate actionable evidence: the case of real-world outcomes in the Food and Drug Administration’s Sentinel system, Journal of the American Medical Informatics Association, Volume 27, Issue 5, May 2020, Pages 793–797.

Regulatory Decisions

External comparator groups in controlled trials offer the possibility of faster study execution and less reliance on placebo arms. But can historical data ever compete with data from an arm that’s randomly assigned and prospectively followed? Only if carefully matched. Read about the challenges, opportunities, and best use cases for such groups.

Carrigan, G., Bradbury, B.D., Brookhart, M.A. et al. External Comparator Groups Derived from Real-world Data Used in Support of Regulatory Decision Making: Use Cases and Challenges. Curr Epidemiol Rep (2022).

For prediction and inference

Interpolation. Extrapolation. Pattern recognition. More and more, our new discoveries take the form of seeing the forest for the trees. Or at least, making a justified prediction of which tree will grow next. But it isn’t enough to do this anecdotally. When health is on the line, statistical rigor is paramount. Fortunately, there’s now more technology to achieve that rigor than ever before. The cutting-edge now lies at the intersection of that technology and ample, timely data. Enter LUCID.

Prediction

Predictive models work best when powered by large, representative datasets marked by a wealth of clearly labeled features. That’s just what federated networks like ours provide. Add powerful tooling for regression and classification, like that available in LUCID, and you’ve constructed a complete machine learning laboratory.

Appelbaum L, Kaplan ID, Palchuk MB, Kundrot S, Winer-Jones JP, Rinard M. Development and experience with cancer risk prediction models using federated databases and electronic health records. In: Linwood SL, editor. Digital Health. Brisbane (AU): Exon Publications. Online first 2022 Feb 02.

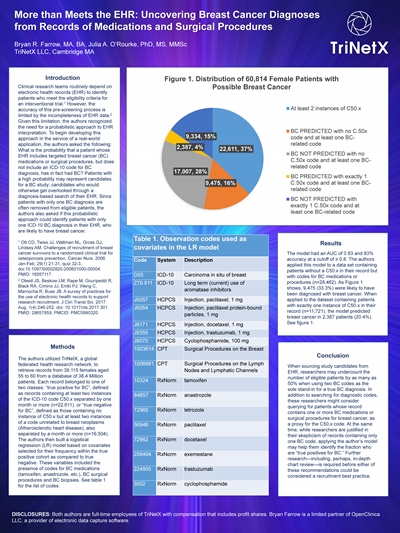

Inference

EHR data is rich, timely — and often incomplete. As patients travel between specialists for care, self-contained records become increasingly partial. But well-established associations, like those between certain medications and the diagnoses they’re intended to treat, let us reconstruct those parts of a patient’s journey left out of the available record.

Farrow B, O’Rourke J. More than Meet the EHR: Uncovering Breast Cancer Diagnoses from Records of Medications and Surgical Procedures. Poster presented at: AMIA 2020 Virtual Annual Symposium; Nov 14-18, 2020; online.

For classification and regression

Daniel Liu, MD, Assistant Professor at the University of Arkansas for Medical Sciences and Arkansas Children’s Hospital, is a model user, literally and figuratively. As a physician with experience in machine learning, he turned to the one-stop-shop of LUCID to save time in building two models for patients presenting with Croup. A classification task asked if these patients would get admitted, while a regression task attempted to predict length of stay. See how LUCID enabled Dr. Liu to move from dataset to hyperparameter tuning in record time.